Hi there!

Some time ago I published a post about sentiment analysis on Twitter. In that post I used two word lists, one with positive and one with negative words. That is a good way to start, but if you want more accurate sentiments you should use an external API, and that's what we do in this tutorial. Before we start, take a look at the authentication tutorial and go through its steps.

The Viralheat API

The Viralheat sentiment API receives more than 300M calls per week, and this volume helps the API get better over time. For example, whenever a company using the API notices that a tweet was classified incorrectly (say, the tweet was positive but the API labelled it neutral), it can correct the result, and the API can use that correction in future analyses.

Viralheat registration

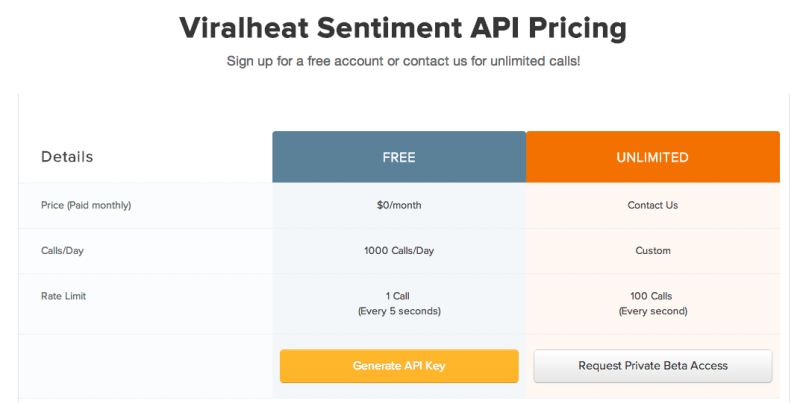

You can use the Viralheat API with a free account. It includes 1,000 calls per day, which should be enough to get started. Just go to the Viralheat Developer Center and register: https://app.viralheat.com/developer

Then generate your free API key, which we'll need later.
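Rather than pasting the key into your script, you can keep it in an environment variable. The sketch below assumes a variable named VIRALHEAT_API_KEY (my own choice of name, not something Viralheat defines), set for example in your ~/.Renviron file; get_api_key() is a hypothetical helper.

```r
# Hypothetical helper: read the Viralheat key from an environment variable.
# The variable name VIRALHEAT_API_KEY is an assumption, not defined by Viralheat.
get_api_key <- function(var = "VIRALHEAT_API_KEY") {
  key <- Sys.getenv(var, unset = "")
  if (!nzchar(key)) {
    stop("Environment variable ", var, " is not set")
  }
  key
}
```

You could then pass get_api_key() to getSentiment() instead of the literal "API-KEY" string.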

Functions

The getSentiment() function

First, load the packages we need for the analysis:

```r
library(twitteR)
library(RCurl)
library(RJSONIO)
library(stringr)
```

The getSentiment() function sends a text to the API, reads the mood and its probability from the JSON reply and returns both in a list. The score is the probability for positive tweets, the negated probability for negative tweets and 0 for neutral ones.

```r
getSentiment <- function(text, key) {
  library(RCurl)
  library(RJSONIO)
  library(stringr)

  # URL-encode the text; reserved = TRUE also escapes &, ?, = and #,
  # which would otherwise break the query string
  text <- URLencode(text, reserved = TRUE)

  # save all the spaces, then get rid of the remaining escapes (the weird
  # characters that break the API), then convert the spaces back
  text <- str_replace_all(text, "%20", " ")
  text <- str_replace_all(text, "%[0-9A-Fa-f]{2}", "")

  # truncate long texts to 360 characters before re-encoding the spaces,
  # so that no "%20" gets cut in half
  if (str_length(text) > 360) {
    text <- substr(text, 1, 360)
  }
  text <- str_replace_all(text, " ", "%20")

  data <- getURL(paste0("https://www.viralheat.com/api/sentiment/review.json?api_key=",
                        key, "&text=", text))
  js <- fromJSON(data, asText = TRUE)

  # get mood probability
  score <- js$prob

  # positive, negative or neutral?
  if (js$mood == "negative") {
    score <- -1 * score
  } else if (js$mood != "positive") {
    # neutral
    score <- 0
  }

  return(list(mood = js$mood, score = score))
}
```
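The sign convention that getSentiment() applies can also be written in vectorised form. This is handy if you store the raw mood and probability and want to recompute the scores later. mood_to_score() is a hypothetical helper that mirrors the if/else in getSentiment(); it is not part of the Viralheat API.

```r
# Hypothetical vectorised helper mirroring getSentiment()'s scoring:
# "positive" keeps the probability, "negative" flips its sign,
# any other mood (neutral) scores 0.
mood_to_score <- function(mood, prob) {
  ifelse(mood == "positive", prob,
         ifelse(mood == "negative", -prob, 0))
}

mood_to_score(c("positive", "negative", "neutral"), c(0.9, 0.75, 0.6))
# returns c(0.9, -0.75, 0)
```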

The clean.text() function

We need this function because tweets containing certain characters cause problems, and because we want to remove retweet markers (“RT”, “via”), @mentions, punctuation, digits and links before sending the text to the API.

```r
clean.text <- function(some_txt) {
  # remove retweet markers and the handles that follow them
  some_txt <- gsub("(RT|via)((?:\\b\\W*@\\w+)+)", "", some_txt, perl = TRUE)
  # remove remaining @mentions
  some_txt <- gsub("@\\w+", "", some_txt)
  # remove punctuation and digits
  some_txt <- gsub("[[:punct:]]", "", some_txt)
  some_txt <- gsub("[[:digit:]]", "", some_txt)
  # remove links (after punctuation removal "http://t.co/x" is "httptcox")
  some_txt <- gsub("http\\w+", "", some_txt)
  # collapse runs of spaces/tabs to a single space, then trim the ends
  some_txt <- gsub("[ \t]{2,}", " ", some_txt)
  some_txt <- gsub("^\\s+|\\s+$", "", some_txt)

  # "tolower error handling": return NA for texts tolower() cannot handle
  try.tolower <- function(x) {
    tryCatch(tolower(x), error = function(e) NA)
  }
  some_txt <- sapply(some_txt, try.tolower)

  # drop empty texts and texts that failed to convert
  some_txt <- some_txt[!is.na(some_txt) & some_txt != ""]
  names(some_txt) <- NULL
  return(some_txt)
}
```
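If you prefer a vectorised version, the sketch below applies the same kinds of substitutions to the whole character vector at once. It differs from clean.text() in two deliberate ways that are my own assumptions, not part of the original approach: it strips non-ASCII characters such as emoji up front with iconv(), so tolower() no longer needs error handling, and it removes links with "http\\S+" before punctuation is stripped. clean_text_vec() is a hypothetical name.

```r
# Hypothetical vectorised alternative to clean.text().
clean_text_vec <- function(x) {
  # drop every non-ASCII byte (emoji, curly quotes, ...)
  x <- iconv(x, from = "UTF-8", to = "ASCII", sub = "")
  # retweet markers with their handles, then remaining @mentions
  x <- gsub("(RT|via)((?:\\b\\W*@\\w+)+)", "", x, perl = TRUE)
  x <- gsub("@\\w+", "", x)
  # links, then punctuation and digits
  x <- gsub("http\\S+", "", x)
  x <- gsub("[[:punct:]]", "", x)
  x <- gsub("[[:digit:]]", "", x)
  # squeeze repeated blanks, trim, lower-case
  x <- trimws(gsub("[ \t]{2,}", " ", x))
  x <- tolower(x)
  # drop empty results
  x[!is.na(x) & x != ""]
}

clean_text_vec(c("RT @apple: The new iPhone5 is great!  http://t.co/xyz",
                 "@bob   ",
                 "So   happy \U0001F600"))
# returns c("the new iphone is great", "so happy")
```

Passing it the tweet_txt vector would give a drop-in replacement for tweet_clean.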

Let's start

OK, now we have our functions, all the packages and the API key.

First we need the tweets. As usual, we get them with the searchTwitter() function:

```r
# harvest tweets
tweets <- searchTwitter("iphone5", n = 200, lang = "en")
```

In my example I used the keyword “iphone5”; of course you can use any keyword you like.

Next we extract the text from the tweets and clean it with the clean.text() function:

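```r
tweet_txt <- sapply(tweets, function(x) x$getText())
tweet_clean <- clean.text(tweet_txt)
mcnum <- length(tweet_clean)
# one row per cleaned tweet; sentiment and score stay NA until the API has scored them
tweet_df <- data.frame(text = tweet_clean,
                       sentiment = rep(NA_character_, mcnum),
                       score = rep(NA_real_, mcnum),
                       stringsAsFactors = FALSE)
```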

Do the analysis

Now we come to the final step: the analysis. We call getSentiment() with the text of every tweet, wait for the reply and save it to the data frame, so this can take some time. Just replace API-KEY with your Viralheat API key.

```r
for (i in seq_len(mcnum)) {
  tmp <- getSentiment(tweet_clean[i], "API-KEY")
  tweet_df$sentiment[i] <- tmp$mood
  tweet_df$score[i] <- tmp$score
}
```
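Two practical problems come up in the comments below: the free account is throttled (one reader needed a pause of about six seconds between calls), and a single bad reply such as "invalid JSON input" aborts the whole loop. The sketch below handles both under my own assumptions: the scoring function is passed in as an argument so the loop can be tested without the API, and a failed call leaves NA in that row. score_all() and its pause argument are hypothetical.

```r
# Hypothetical helper: score every text with `scorer`, pausing between
# calls and recording NA instead of stopping when a call fails.
score_all <- function(texts, scorer, pause = 6) {
  n <- length(texts)
  out <- data.frame(text = texts,
                    sentiment = rep(NA_character_, n),
                    score = rep(NA_real_, n),
                    stringsAsFactors = FALSE)
  for (i in seq_len(n)) {
    # any error (e.g. "invalid JSON input" from fromJSON()) becomes NULL
    res <- tryCatch(scorer(texts[i]), error = function(e) NULL)
    if (!is.null(res)) {
      out$sentiment[i] <- res$mood
      out$score[i] <- res$score
    }
    # wait before the next request to stay under the free-tier limit
    if (i < n) Sys.sleep(pause)
  }
  out
}
```

With getSentiment() defined, the loop could then be written as `tweet_df <- score_all(tweet_clean, function(t) getSentiment(t, "API-KEY"))`.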

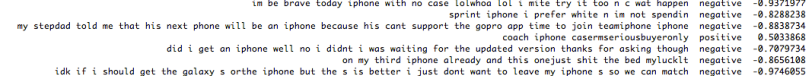

That's it! Our analyzed tweets are now in the tweet_df data frame, and you can show the results with:

```r
tweet_df
```
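Printing the whole data frame gets unwieldy with 200 tweets. A small summary, sketched below with a hypothetical summarise_sentiment() helper, counts the moods and averages the scores of the tweets that were actually scored; rows whose sentiment is NA or empty (for example because the API call failed) are skipped.

```r
# Hypothetical helper: count moods and average the scores of scored rows.
summarise_sentiment <- function(df) {
  scored <- df[!is.na(df$sentiment) & df$sentiment != "", ]
  list(
    counts = table(factor(scored$sentiment,
                          levels = c("positive", "neutral", "negative"))),
    mean_score = mean(scored$score)
  )
}
```

Calling summarise_sentiment(tweet_df) returns the mood counts and the mean score as a list.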

Note:

Sometimes the API breaks when it receives certain characters. I couldn't figure out why yet, but as soon as I know, I will update this tutorial.

Please also note that sentiment analysis can only give you a rough overview of the mood.
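About the API breaking on certain characters: one possible cause, not confirmed against the Viralheat API, is how percent-escapes are stripped before the request is sent. A digits-only pattern such as "%\\d\\d" misses escapes that contain the hex letters A-F, so a multi-byte character like an emoji leaves fragments such as "%F0%9F" behind, which no longer form a valid UTF-8 character. The base-R sketch below compares that pattern with a hex-aware one; strip_encoded() is a hypothetical helper.

```r
# Hypothetical helper: URL-encode a text, keep the encoded spaces and
# drop every other percent-escape matched by `pattern`.
strip_encoded <- function(text, pattern = "%[[:xdigit:]]{2}") {
  enc <- utils::URLencode(text, reserved = TRUE)
  enc <- gsub("%20", " ", enc, fixed = TRUE)  # protect the spaces
  enc <- gsub(pattern, "", enc)               # drop remaining escapes
  gsub(" ", "%20", enc, fixed = TRUE)         # re-encode the spaces
}

emoji_tweet <- "love it \U0001F600 so much"
strip_encoded(emoji_tweet)                       # "love%20it%20%20so%20much"
strip_encoded(emoji_tweet, pattern = "%\\d\\d")  # "love%20it%20%F0%9F%20so%20much"
```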

I get an error: tweet_clean not found.

Thanks for your comment! I forgot two lines of code, but now everything should work fine.


When I try this, I get the following errors during the analysis:

```
Error in fromJSON(content, handler, default.size, depth, allowComments, :
  invalid JSON input
Error in lapply(X = X, FUN = FUN, …) : object ‘mc_tweets’ not found
```

My tweet_df comes back with no sentiment scores. Any suggestions?

Hey Ray,

please replace “mc_tweets” with “tweets”.

I also changed it in the code.

Does this work for you?

Regards

Yes, I made that change; I mentioned it mostly for your reference. More concerning is that my data frame doesn't have any sentiment scores in it. I get:

```
Error in function (type, msg, asError = TRUE) :
  SSL certificate problem, verify that the CA cert is OK. Details:
error:14090086:SSL routines:SSL3_GET_SERVER_CERTIFICATE:certificate verify failed
```

I've modified the tweet harvesting step to

```r
# harvest tweets
tweets = searchTwitter("#energyefficiency", n=20, lang="en", cainfo="cacert.pem")
```

so I think the problem is the Viralheat API, not the Twitter API. Any suggestions?

Hey Ray,

for me everything works; I just tried it. There also weren't any changes to the Viralheat sentiment API, so I think the problem has something to do with your environment, especially the SSL settings.

Did you use my Twitter authentication tutorial? It sets the SSL settings in the twitCred object, so there is no need to specify the cacert file when you call searchTwitter().

Could you please post your R input and output?

Regards

Thanks, Julianhi. I went back and followed your Twitter tutorial and had a lot more success. It's weird that the API breaks when it gets characters it doesn't like. I'm going to try Datumbox next. Thanks for your help, and really cool work!

I was getting the “Error in fromJSON(content, handler, default.size…” error and had to change the URL for the ViralHeat API from “https://www.viralheat.com/api/sentiment/review.json?api_key=” to “https://app.viralheat.com/social/api/sentiment?api_key=”. Now I get sentiment back from ViralHeat. Additionally, on the free account ViralHeat only allows one API call every 5 seconds, so the loop that fetches the sentiments needs some form of wait/sleep between calls. I'm using Sys.sleep(6) because with Sys.sleep(5) I was still getting throttled.

By the way, I really like the sentiment word cloud example you did with DatumBox.

Thank you very much!

I will add that to the tutorial 🙂

Regards